- [2025.02] Two papers have been accepted to CVPR 2025.

- [2024.09] Two papers have been accepted to NeurIPS 2024.

- [2024.07] One paper has been accepted to SIGGRAPH Asia 2024 (TOG).

- [2024.02] Two papers have been accepted to CVPR 2024.

- [2023.12] One paper has been accepted to AAAI 2024.

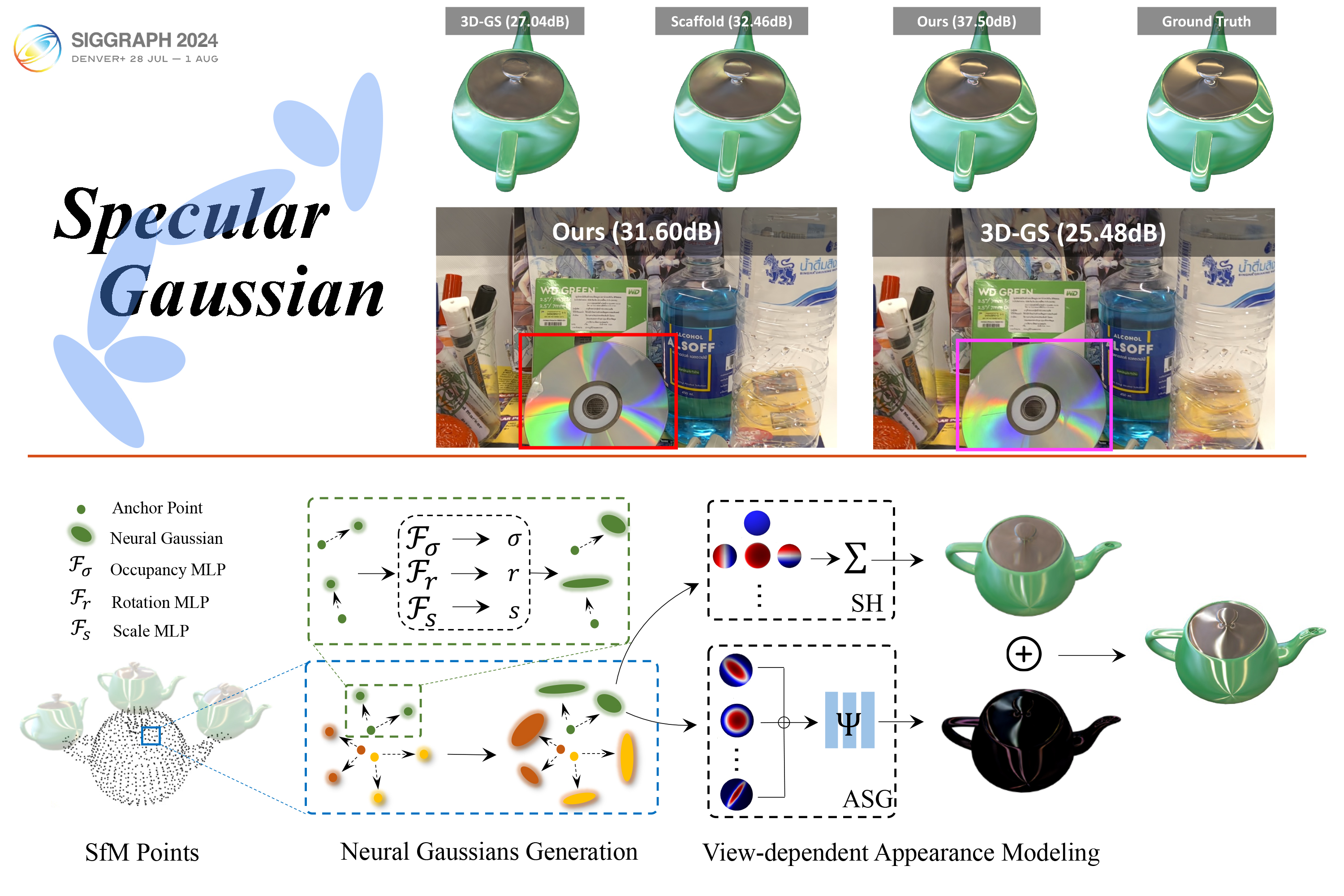

Spec-Gaussian aims to handle scenes with specular highlights and anisotropy. The key idea is to model the appearance of each 3D Gaussian with an anisotropic spherical Gaussian (ASG) appearance field instead of spherical harmonics (SH).
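
For readers unfamiliar with ASG, here is a minimal sketch of evaluating anisotropic spherical Gaussian lobes for a view direction, using the standard ASG definition of Xu et al. (2013). How Spec-Gaussian parameterizes, stores, and decodes its ASG features per Gaussian is not reproduced here; the helper `asg_color` and its lobe tuple layout are hypothetical.

```python
import math

def dot(a, b):
    """Dot product of two 3-vectors given as tuples."""
    return sum(p * q for p, q in zip(a, b))

def asg(view_dir, x_axis, y_axis, z_axis, lam, mu, amplitude):
    """Evaluate one ASG lobe in the form of Xu et al. (2013).

    view_dir and the three lobe axes are unit 3-vectors; (x_axis, y_axis, z_axis)
    must be orthonormal. lam and mu (> 0) set the sharpness along x and y, so
    lam != mu gives an anisotropic (elongated) highlight.
    """
    smooth = max(dot(view_dir, z_axis), 0.0)  # zero for directions behind the lobe
    falloff = math.exp(-lam * dot(view_dir, x_axis) ** 2
                       - mu * dot(view_dir, y_axis) ** 2)
    return amplitude * smooth * falloff

def asg_color(view_dir, lobes):
    """Sum several ASG lobes into an RGB value (hypothetical helper).

    Each lobe is (x_axis, y_axis, z_axis, lam, mu, rgb).
    """
    total = [0.0, 0.0, 0.0]
    for x_axis, y_axis, z_axis, lam, mu, rgb in lobes:
        weight = asg(view_dir, x_axis, y_axis, z_axis, lam, mu, 1.0)
        total = [t + weight * k for t, k in zip(total, rgb)]
    return total
```

Viewing straight down a lobe's z axis returns its full amplitude, and tilting the view toward the sharper axis (larger `lam`) dims the highlight faster than tilting toward the other axis, which is the anisotropy that SH struggles to capture compactly.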

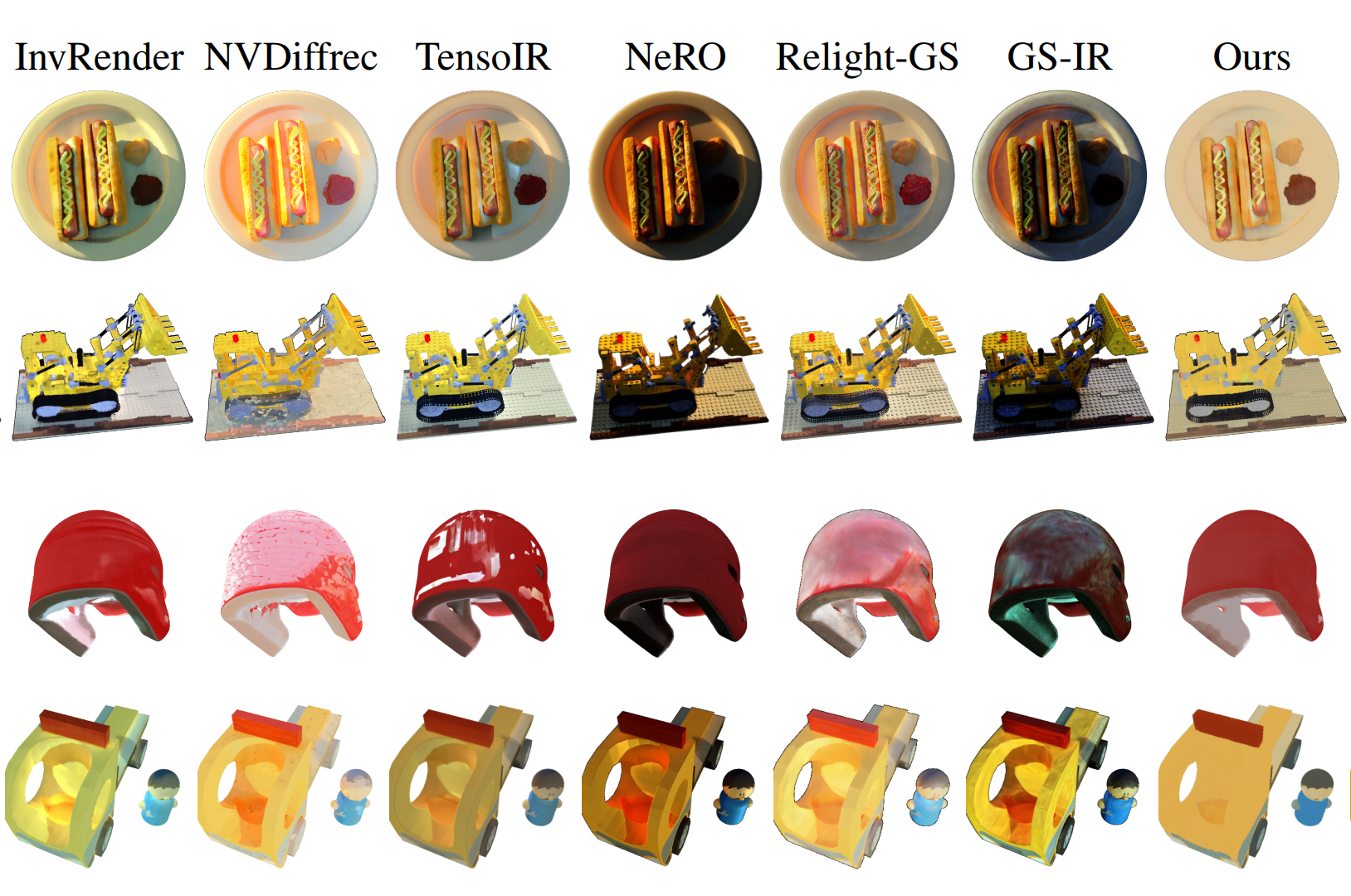

We propose a novel neural-field-based inverse rendering framework for high-illumination scenes. We employ scene-specific ACES tone mapping and regularized visibility estimation to eliminate shadows baked into the recovered PBR materials.
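
ACES tone mapping is commonly implemented with the rational approximation of the ACES filmic curve popularized by Krzysztof Narkowicz. The sketch below implements that approximation; exposing its coefficients and an exposure scale as per-scene parameters is an assumption made here to illustrate what "scene-specific" could mean, not necessarily how the paper parameterizes its tone mapper.

```python
from dataclasses import dataclass

@dataclass
class AcesCurve:
    """ACES filmic tone curve, rational approximation (Narkowicz fit).

    The defaults are the widely used fitted constants. Treating them and
    `exposure` as per-scene (optimizable) parameters is an assumption.
    """
    a: float = 2.51
    b: float = 0.03
    c: float = 2.43
    d: float = 0.59
    e: float = 0.14
    exposure: float = 1.0

    def __call__(self, radiance: float) -> float:
        # Map linear HDR radiance to a display value in [0, 1].
        x = max(radiance, 0.0) * self.exposure
        y = (x * (self.a * x + self.b)) / (x * (self.c * x + self.d) + self.e)
        return min(max(y, 0.0), 1.0)
```

Because the curve compresses bright radiance smoothly instead of clipping it, a differentiable, per-scene version of it lets an inverse renderer explain strong highlights through tone mapping rather than by distorting the recovered materials.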

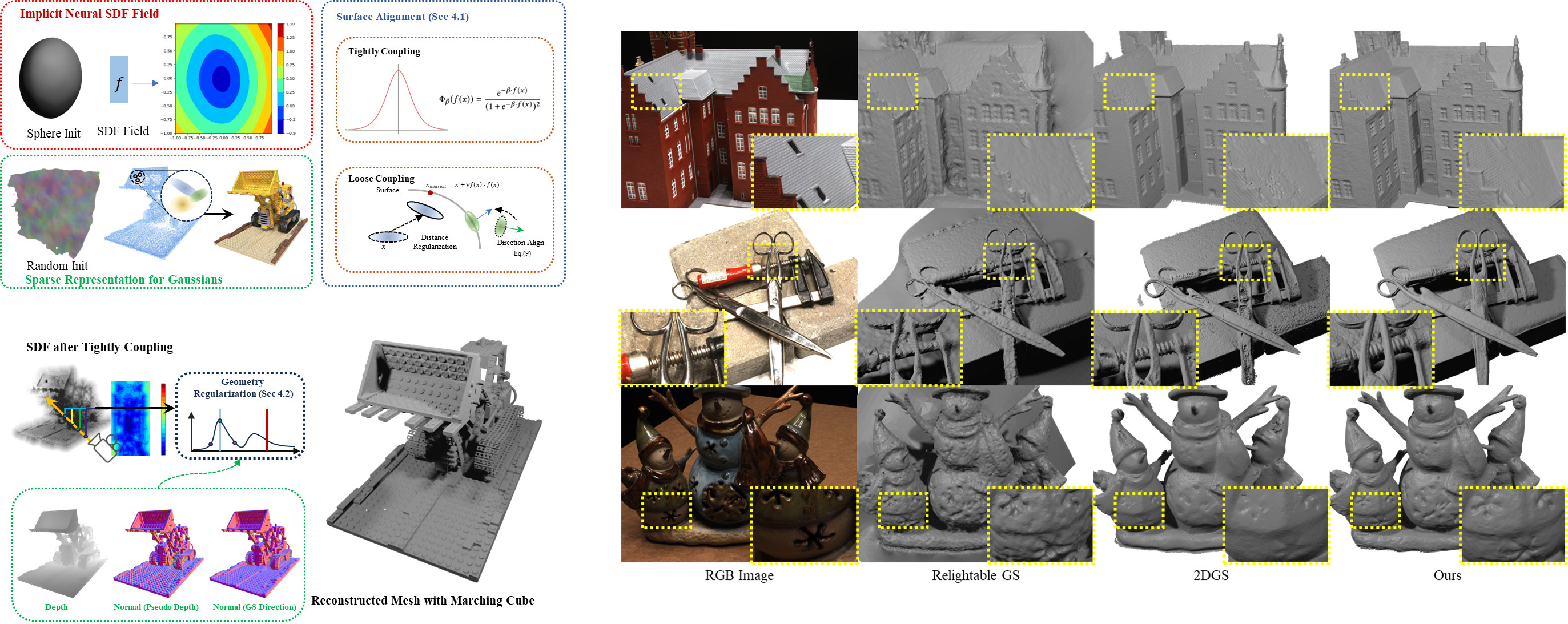

We propose a joint reconstruction technique that couples 3D Gaussian Splatting (GS) with neural SDFs to achieve high-quality reconstructions.

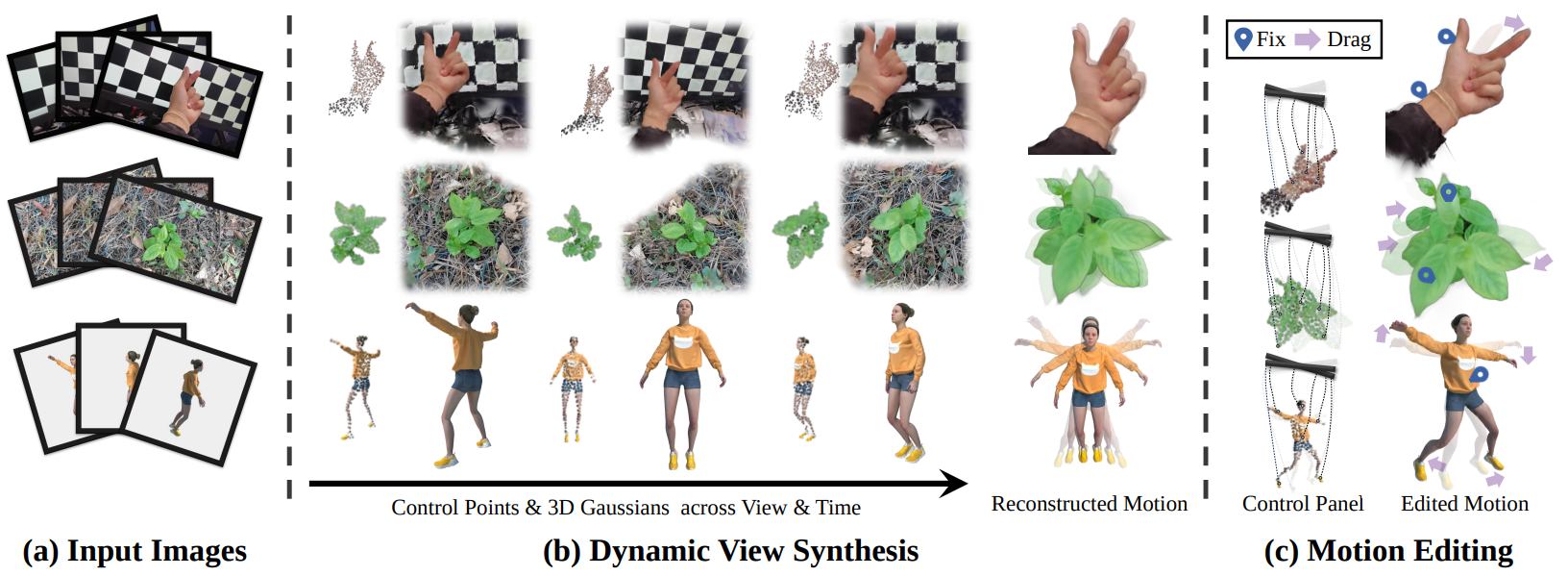

We propose a new representation that explicitly decomposes the motion and appearance of dynamic scenes into sparse control points and dense Gaussians, respectively. Our key idea is to use sparse control points, far fewer in number than the Gaussians, to learn compact 6-DoF transformation bases, which are locally interpolated through learned interpolation weights to yield the motion field of the 3D Gaussians. Please visit the project page for more demos.
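
The interpolation step can be pictured as linear blend skinning: each control point carries a rigid transform (rotation plus translation, i.e. 6 DoF), and every Gaussian center is moved by a weighted average of its nearest control points' transforms. The sketch below assumes Gaussian radial-basis weights with a per-control-point radius over the k nearest neighbors; those choices, the function names, and the rotation-about-z helper are illustrative assumptions, and the paper's learned weights and per-frame transform prediction are not shown.

```python
import math

def rot_z(theta):
    """3x3 rotation matrix about the z axis (row-major nested tuples)."""
    c, s = math.cos(theta), math.sin(theta)
    return ((c, -s, 0.0), (s, c, 0.0), (0.0, 0.0, 1.0))

def mat_vec(m, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))

def rbf_weights(point, centers, radii, k):
    """Normalized Gaussian-RBF weights over the k nearest control points.

    Assumed weighting scheme: w_i proportional to exp(-d_i^2 / (2 r_i^2)),
    computed relative to the largest logit so it never divides by zero.
    """
    d2 = [sum((p - c) ** 2 for p, c in zip(point, ctr)) for ctr in centers]
    nearest = sorted(range(len(centers)), key=lambda i: d2[i])[:k]
    logits = {i: -d2[i] / (2.0 * radii[i] ** 2) for i in nearest}
    top = max(logits.values())
    raw = {i: math.exp(logit - top) for i, logit in logits.items()}
    total = sum(raw.values())
    return {i: w / total for i, w in raw.items()}

def deform(point, centers, rotations, translations, radii, k=4):
    """Move one Gaussian center by blending the control points' rigid transforms.

    Each control point i rotates the point about its own position and then
    translates it; the results are averaged with the RBF weights
    (linear-blend-skinning style).
    """
    out = [0.0, 0.0, 0.0]
    for i, w in rbf_weights(point, centers, radii, k).items():
        local = tuple(p - c for p, c in zip(point, centers[i]))
        rotated = mat_vec(rotations[i], local)
        for a in range(3):
            out[a] += w * (rotated[a] + centers[i][a] + translations[i][a])
    return tuple(out)
```

Since the weights sum to one, a shared translation on all control points shifts every Gaussian by exactly that amount, while differing transforms produce smooth, locally varying motion driven by only a few control points.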

The first deformation-based Gaussian Splatting method for dynamic scenes. We propose deformable 3D Gaussian Splatting, which reconstructs scenes with 3D Gaussians learned in a canonical space, together with a deformation field that models monocular dynamic scenes. We also introduce an annealing smoothing training mechanism to mitigate the impact of inaccurate poses in real-world datasets.
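
The annealing smoothing idea can be sketched as injecting noise into the time input of the deformation field early in training and fading it out, which is intended to keep the field from fitting jitter caused by inaccurate poses. The linear decay schedule, the default `beta` and `anneal_iters` values, and the helper names below are assumptions for illustration; the deformation field is passed in as a stand-in callable rather than a trained MLP.

```python
import random

def annealed_time(t, iteration, mean_dt, beta=0.1, anneal_iters=20_000, rng=random):
    """Return the (possibly perturbed) timestamp fed to the deformation field.

    t is the frame's normalized time, mean_dt the average interval between
    frames. Gaussian noise scaled by beta * mean_dt is added early in training
    and shrinks linearly to zero at anneal_iters (assumed schedule).
    """
    if iteration >= anneal_iters:
        return t
    scale = beta * mean_dt * (1.0 - iteration / anneal_iters)
    return t + rng.gauss(0.0, 1.0) * scale

def deformed_center(canonical, t, deformation_field):
    """Canonical-space Gaussian center plus the offset predicted for time t.

    deformation_field is a hypothetical stand-in for the learned network.
    """
    offset = deformation_field(canonical, t)
    return tuple(c + d for c, d in zip(canonical, offset))
```

During training one would query `deformed_center(x, annealed_time(t, it, mean_dt), field)`; after `anneal_iters` the time input is exact again, so the final model is evaluated without noise.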

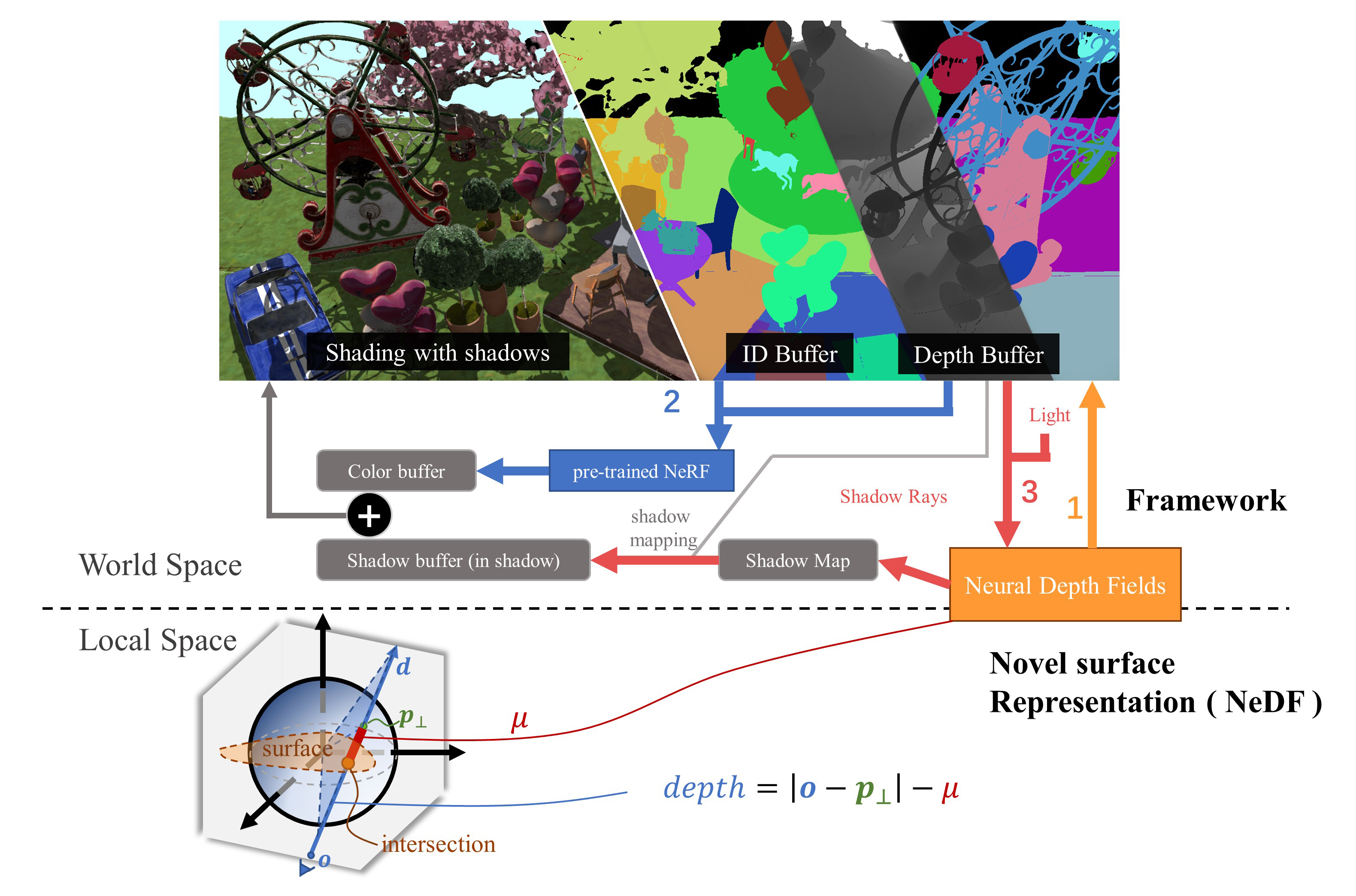

We propose a general implicit pipeline for quickly composing NeRF objects.

Last update: 2024.10.09. Thanks.